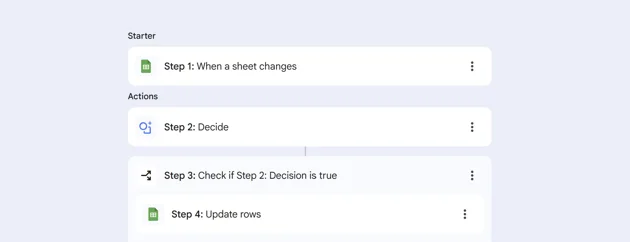

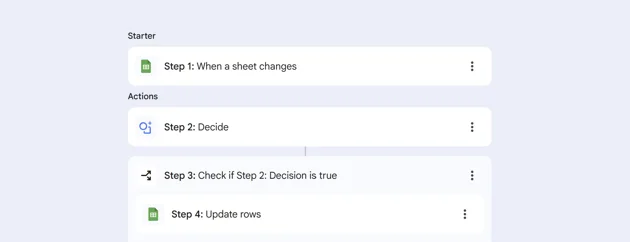

As an Apps Script addict, I was excited to experiment with Google Workspace Studio. It's a no-code automation tool in the typical flowchart style, with Gemini built in so you can use AI for decisions and text manipulation. Unfortunately, it failed hard on my first task.

I have a spreadsheet that pulls in Google Fit data, and another one that combines that data with other goals to create an overall lifestyle score. Occasionally I copy data from one sheet to the other, and automating this has been on my to-do list for years with absolutely no chance of getting to done. Workspace Studio should have made this easy.
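For comparison, here is roughly the job I wanted the flow to do, written as an Apps Script sketch. It's a minimal, hypothetical version: the spreadsheet IDs and tab names are placeholders, and it assumes both sheets have a header row, the same column layout, and a date (or other unique key) in column A so rows that were already copied can be skipped.

```javascript
// Hypothetical IDs and tab names: replace with your own.
const SOURCE_ID = 'SOURCE_SPREADSHEET_ID';
const TARGET_ID = 'TARGET_SPREADSHEET_ID';
const SOURCE_TAB = 'Fit';
const TARGET_TAB = 'Fit data';

// Normalise a cell value so Date objects and strings compare reliably.
function rowKey(value) {
  return value instanceof Date ? value.getTime() : String(value);
}

// Append source rows whose column-A key is not already in the target sheet.
// Assumes matching column layouts and a header row in both sheets.
// Returns the number of rows copied.
function copyNewRows() {
  const source = SpreadsheetApp.openById(SOURCE_ID).getSheetByName(SOURCE_TAB);
  const target = SpreadsheetApp.openById(TARGET_ID).getSheetByName(TARGET_TAB);

  const sourceLast = source.getLastRow();
  if (sourceLast < 2) return 0; // only a header row, nothing to copy
  const rows = source.getRange(2, 1, sourceLast - 1, source.getLastColumn()).getValues();

  // Collect the keys already present in column A of the target, skipping its header.
  const targetLast = target.getLastRow();
  const existing = new Set(
    targetLast < 2
      ? []
      : target.getRange(2, 1, targetLast - 1, 1).getValues().map(r => rowKey(r[0]))
  );

  // Keep non-empty rows that haven't been copied yet.
  const fresh = rows.filter(r => r[0] !== '' && !existing.has(rowKey(r[0])));
  if (fresh.length === 0) return 0;

  // setValues needs a 2D array that exactly matches the range dimensions.
  target.getRange(targetLast + 1, 1, fresh.length, fresh[0].length).setValues(fresh);
  return fresh.length;
}
```

Run `copyNewRows` once from the script editor to authorize it; for a hands-off version, a time-driven trigger such as `ScriptApp.newTrigger('copyNewRows').timeBased().everyDays(1).create()` would run it daily. Unlike the flow, the script doesn't care whether either spreadsheet is shared, as long as the account running it has edit access.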

Building the flow was straightforward, but the steps that write new data were marked as being in an error state, even though their configuration showed no actual errors. Unsettlingly, opening and closing the flow cleared the errors. I started the flow and hoped for the best, but got this error:

"Couldn't complete. Check that the spreadsheet is private and doesn't use the IMPORTRANGE function."

At first I thought the message must be inverted and the sheet needed to be shared in some way... but no, it's accurate: you can only update a private sheet. That's useful in a tree-falling-in-the-forest-with-no-one-around-to-hear-it kind of way.

I'm sharing this mostly because googling the error came up short (and the AI overview unhelpfully talks about sharing the sheet). Workspace Studio is only a few months old, so hopefully this limitation will be fixed. There are some nice features in preview, like webhooks, which raise the prospect of handing over to Apps Script when a flow can't do quite what you need. As it matures, this should be a nice piece of the AI automation puzzle.