Catfood WebCamSaver 3.30

Catfood WebCamSaver 3.30 is now available to download. This release contains the latest webcam updates.

I've just released Fortune Cookies for Android 1.30. The last update was in... 2013... it turns out the Android ecosystem has moved on a bit since then and it wasn't even in the Play Store any more as a result. This update has a nice modern theme but is otherwise just an Android version of the classic UNIX fortune command. The database of fortunes is ancient and just running this might get you canceled / fired / etc so use with due caution. If you have this installed it will update as soon as the release is approved.

(Published to the Fediverse as: Fortune Cookies for Android 1.30 #code #fortune #software UNIX style fortune cookies on Android (updated for Android 12) )

Here is a simple Apps Script to control LIFX WiFi light bulbs. It turns on the bulbs in the morning until a little after sunrise and then again in the evening before sunset until a configurable time. As a bonus it will also rotate between some seasonal colors in the evening on several holidays.

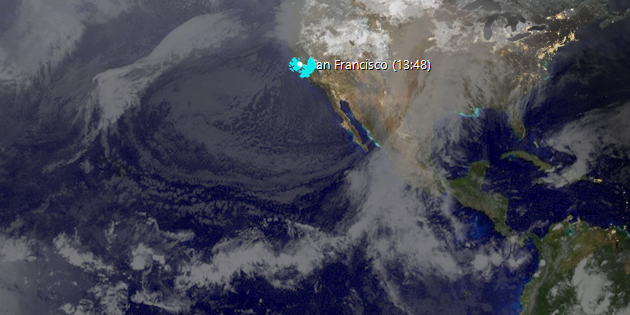

To get this up and running you need a LIFX access token (from here) and a selector. If you have a group of lights called bob this would be group:bob. It could also be a single bulb ID like id:d3b2f2d97452 (more details here). There are some other settings you probably want to change: Latitude and Longitude (if you don't live in San Francisco for some reason), OnHour and OffHour for the time to switch on before sunrise and off after sunset, DaytimeOffsetMins is the minutes to wait after sunrise before switching off and before sunset for switching on, and then finally default color, brightness and fade time.
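As a rough illustration of how these settings fit together, here is a minimal sketch of the on/off decision. It is not the script from this post: the `settings` object, the `shouldBeOn` name and the example values are assumptions, and times are simplified to minutes since local midnight.

```javascript
// Hypothetical settings mirroring the names above; values are examples only.
const settings = {
  OnHour: 6,             // earliest hour to switch on in the morning
  OffHour: 22,           // hour to switch off in the evening
  DaytimeOffsetMins: 30, // minutes after sunrise / before sunset
};

// All times are minutes since local midnight (e.g. 6:30 am = 390).
function shouldBeOn(nowMins, sunriseMins, sunsetMins, s) {
  // Morning window: from OnHour until a little after sunrise.
  const morningOn = nowMins >= s.OnHour * 60 &&
                    nowMins < sunriseMins + s.DaytimeOffsetMins;
  // Evening window: from a little before sunset until OffHour.
  const eveningOn = nowMins >= sunsetMins - s.DaytimeOffsetMins &&
                    nowMins < s.OffHour * 60;
  return morningOn || eveningOn;
}
```

With sunrise at 7:00 and sunset at 18:00 this keeps the bulb on from 6:00 to 7:30 and again from 17:30 to 22:00. The sketch assumes OffHour falls before midnight.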

In Google Drive create a new apps script project and copy in the following code:
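The listing itself is not reproduced in this copy, so what follows is only a minimal sketch of the two web requests such a script needs, not the original code. The helper names, the placeholder token and the example color are assumptions; the endpoints are the public LIFX "set state" call and the sunrise-sunset.org JSON API.

```javascript
// Assumed placeholders: replace with your own token and selector.
const LIFX_TOKEN = 'your-token-here';
const SELECTOR = 'group:bob';

// Build the sunrise-sunset.org query; formatted=0 asks for ISO 8601 UTC times.
function sunTimesUrl(lat, lng) {
  return 'https://api.sunrise-sunset.org/json?lat=' + lat +
         '&lng=' + lng + '&formatted=0';
}

// Build the LIFX "set state" request for a selector.
function lifxStateRequest(selector, token, power, color, brightness, fadeSecs) {
  return {
    url: 'https://api.lifx.com/v1/lights/' + encodeURI(selector) + '/state',
    options: {
      method: 'put',
      contentType: 'application/json',
      headers: { Authorization: 'Bearer ' + token },
      payload: JSON.stringify({ power, color, brightness, duration: fadeSecs }),
    },
  };
}

// Apps Script only: UrlFetchApp and Logger do not exist outside Google's runtime.
function setLights(power) {
  const req = lifxStateRequest(SELECTOR, LIFX_TOKEN, power, 'kelvin:2700', 0.8, 5);
  const response = UrlFetchApp.fetch(req.url, req.options);
  Logger.log(response.getContentText());
}
```

Keeping the request building separate from the `UrlFetchApp` call makes it easy to log and check the URL and payload before anything is sent to a bulb.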

Configure the settings at the top in the code editor. Switch to settings (cog at the bottom of the right hand menu) and make sure the script time zone is correct for your location. Back in the code editor run the function nightSchedule() to check that everything is working as expected. This will print the calculated times and then switch the light on or off. Once you're happy with this, go to the Triggers page (clock icon) and set nightSchedule() to run as often as you like (I use every minute).

The current design of the script will update the bulb(s) every time you run it (so if you manually toggle on during the day the script will turn the light off the next time it runs). It would be possible to store the current state in script settings if you want it to behave differently. You could also add or remove holidays and colors to use to celebrate them. If you have other ideas or enhancements please leave a comment below.
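One way to remember the last state, sketched under assumptions: `updateIfChanged` and the `lastPower` key are made-up names, and `store` is anything with `getProperty` and `setProperty`, which in Apps Script could be `PropertiesService.getScriptProperties()`.

```javascript
// Only touch the bulb when the scheduled state differs from the last one sent.
function updateIfChanged(store, wantOn, sendToBulb) {
  const wanted = wantOn ? 'on' : 'off';
  if (store.getProperty('lastPower') === wanted) {
    return false; // schedule has not changed, so leave manual toggles alone
  }
  sendToBulb(wanted);
  store.setProperty('lastPower', wanted);
  return true;
}
```

With this in place a manual toggle during the day would stick until the next scheduled change, rather than being undone on the next run.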

Thanks to LIFX for a well documented and easy to use API. Also to sunrise-sunset.org for making a free API that provides sunrise and sunset times for any location.

For many years I had a similar setup using a Philips Hue and IFTTT. Philips pulled support for their proprietary hub, I wasn't about to buy a new one, and the IFTTT triggers didn't provide a lot of control over the exact time to switch a bulb on or off. LIFX plus Apps Script is a much better solution. And it's at least halfway towards the build-it-myself smart home philosophy I swore to a couple of years ago.

(Published to the Fediverse as: Control LIFX WiFi light bulbs from Google Apps Script #software #appsscript #drive #philips How to use Google Apps Script to control LIFX WiFi light bulbs and switch lighting on in the morning and evening around sunrise and sunset (plus special color programs for holidays). )

Catfood WebCamSaver 3.28 is available to download.

This release updates the webcam list.

Catfood WebCamSaver is a Windows screen saver that streams live views from random webcams around the world. Download for Windows. You can choose to display 1, 4 or 16 webcams at the same time, and WebCamSaver fully supports multiple monitors. Catfood WebCamSaver was first released in 2004.

When you install Catfood WebCamSaver a shortcut is created to Screen Saver Settings. You can search for Catfood WebCamSaver Settings and run the shortcut, or open Screen Saver Settings directly (on Windows 10, Start -> Settings -> Personalization -> Lock screen -> Screen saver settings). From Screen Saver Settings make sure that Catfood WebCamSaver is selected and then click Settings. On the Windows settings dialog you can also choose how many minutes to wait before starting the selected screen saver and if the login screen should be displayed when the screen saver exits.

Catfood WebCamSaver Settings has three tabs:

Under Description choose if the description of the webcam and the local time if available should be displayed. Click Font to pick the font, color, and optional outline color to use for the description and time.

Under Display choose the number of webcams to show at the same time (1, 4 or 16), how often to switch to a new webcam and how often to refresh the webcam image.

Under Offline Display you can choose a fallback screensaver to start if the Internet is not available.

Please note that recent releases of WebCamSaver will delete and recreate the webcam list on install. This means you will lose any customization of the list on upgrade. See the import and export instructions below to back up any changes you have made.

The Webcams list shows the current library of webcams. Click the column headers to sort (and click again to sort in the opposite direction). You can enable or disable a specific cam using the checkbox in the list.

Click Add to enter details for a new webcam.

When a webcam in the list is selected click Open to launch the web page associated with the webcam, Edit to update details or Delete to remove it from the list entirely.

Click Delete All to remove all webcams from the list.

Click Enable All or Disable All to toggle the checkboxes for all webcams in the list.

You can save your customized list by clicking Export and restore it by clicking Import.

If you prefer day (or night) scenes you can set a local time range here. If this option is enabled webcams where the time zone is unknown will never be displayed.
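The idea behind this filter can be sketched as follows. This is an illustration only, not WebCamSaver's code (which is a .NET application): the `timeZone` field and both function names are assumptions, and zones are taken to be IANA names.

```javascript
// Hour of day (0-23) in an IANA time zone for a given instant.
function localHour(date, timeZone) {
  const text = new Intl.DateTimeFormat('en-US', {
    timeZone, hour: 'numeric', hourCycle: 'h23',
  }).format(date);
  return parseInt(text, 10);
}

// Keep only cams whose local hour falls inside [startHour, endHour).
function camsInLocalRange(cams, now, startHour, endHour) {
  return cams.filter(cam => {
    if (!cam.timeZone) return false; // unknown zone: never shown
    const h = localHour(now, cam.timeZone);
    return startHour <= endHour
      ? h >= startHour && h < endHour
      : h >= startHour || h < endHour; // range wraps past midnight
  });
}
```

A range such as 20 to 6 wraps past midnight, which is how a night-only preference would be expressed here.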

Click Proxy Settings to configure a proxy server.

Catfood WebCam Browser is a companion application installed with WebCamSaver. When you open WebCam Browser it will start showing a random webcam from the library.

Pick Cam opens a window where you can select a webcam to view from the full list. You can sort the list (click any column header, click again to sort the opposite direction). There is also a filter that only shows webcams matching text that you enter.

Random Cam will select a new webcam at random from the list and start streaming images.

Control Cam launches a URL where you can control the currently selected webcam (if available).

About shows the version and copyright information for the application.

Catfood WebCamSaver requires version 4.8 of the .NET Framework. You almost certainly have this already and the installer will download it if necessary. The installer for Catfood WebCamSaver is digitally signed. You may get a warning about it being infrequently used which you will need to ignore to install.

For support please visit this post, check to see if your issue has already been addressed and if not leave a new comment.

“We LOVE your screen saver. I've seen others try this idea, but never quite seemed to get it right.” Buddy King

“The other day I watched as a middle aged couple in Japan took photos of the changing orange leaves along a calm street. I saw another couple, some tourists, digging for their sunscreen on the beach in Kona, HI.” MIT Center for Future Civic Media Blog

“I've been fascinated by your ‘saver’! It strikes me that so many different places & cultures seem to share some common traits, so that each saver appears to be both familiar and exotic.” Tom

“Very nice screensaver. One of the best I've seen.” Andy Cant

“As a live webcam afficionado, I believe your product is the best, offering high quality resolution, reliable transmissions, a nice blend of everyday street scenes with landscape views, and multiple cam shots filling the entire widescreen page.” Oliver Garrison

“The new version is wonderful. I love being able to sort by age (so I'll never miss a new addition), and I love how easy it is to select my favourites. Basically, I love everything about it. It's so much fun, and brings so much variety to the screen. I have my favourite cams - The streets of Narbonne, that crazy Japanese wind-speed screen, the flag-filled car-park of a Dutch retail park - But it's always fun to leave this screensaver on random and let it transport me to a completely new place.” James

“I have to say that I am enjoying the sights that I am able to see on the web cam. The views are very clear and they are never the same shots. I like being able to show off my new screensaver. Thank you.” Isaac Adel

“Hi, I just love your web cam, I enjoy looking around the world at places I have never been to. Whoever thought up this idea, gets a 10/10 from me.” Lynne

Catfood WebCamSaver 3.22 is available to download. This release updates the webcam list.

Catfood WebCamSaver 3.31 is available to download. This includes the latest update to the webcam list.

Catfood WebCamSaver 3.30 is now available to download. This release contains the latest webcam updates.

Catfood WebCamSaver 3.29 is now available to download.

This release updates the webcam list and includes a selection of new webcams provided by a long-time user.

Catfood WebCamSaver 3.28 is available to download.

This release updates the webcam list.

Catfood WebCamSaver 3.27 is available to download.

This release contains the latest webcam list and will upgrade any current set of webcams. I'm currently releasing updates for WebCamSaver every three months with the latest cams.

Catfood WebCamSaver 3.26 is available to download.

This update includes the latest list of webcams. It has also been updated to use .NET 4.8 which means it will install with no additional downloads needed on Windows 10 and 11. The installer is now signed. You might get a warning from Defender about the program being infrequently used; you will need to ignore this to install. Lastly WebCamSaver will now automatically check for updates so you'll get a desktop notification when a new version is available.

Catfood WebCamSaver 3.25 is available for download. This release includes the latest webcam list.

Catfood WebCamSaver 3.24 is available for download. This release includes an update to the default list of webcams.

(Previously: Catfood WebCamSaver 3.21)

Catfood WebCamSaver 3.21 is available for download.

This update fixes a screensaver install issue on recent versions of Windows 10 and has the latest webcam list.

Catfood WebCamSaver 3.20 is available for download.

WebCamSaver is a Windows screensaver that shows you a feed from open web cameras around the world. It also includes WebCamBrowser which allows you to explore the directory and launch a URL where you can control each cam.

Version 3.20 includes an updated list of working webcams - if you are an existing user this will replace any current list the first time you run the updated version.

(Published to the Fediverse as: Catfood WebCamSaver #code #webcamsaver #catfood #webcam #screensaver #webcam #software #webcams #screensaver A Windows screen saver that displays live webcams from around the world. Supports 1, 4 or 16 webcams per monitor (multi-display setups fully supported). Free download. )

Catfood Earth uses satellite imagery and data feeds to create live wallpaper for Windows and Android. Earth shows you the current extent of day and night combined with global cloud cover (clouds updated every hour). You can also choose to display time zones, places, earthquakes, volcanoes and weather radar. The Windows version includes a screen saver. Catfood Earth was first released in 2003. Version 4 was fully remastered to support 4K resolution on both platforms.

Catfood Earth can be quickly configured with three different themes. After installing, run Catfood Earth Settings and click one of the buttons to activate a theme. If you want to customize further then visit the layers and advanced tabs of the settings app, described in detail below. The themes are:

Natural is the default theme. This combines day, night and cloud cover. The day image uses NASA's Blue Marble Next Generation monthly global composites, interpolated daily so you can see changes in snow, ice and even vegetation throughout the year. Next, global cloud cover is blended on top of the day image (updated hourly). Finally Catfood Earth computes the extent of day and night and blends in NASA's city lights image. You can update the image as frequently as every minute and watch the terminator between day and night move across the planet and change shape with the passing seasons. The video below shows how all three components of the natural theme change over the course of a year:

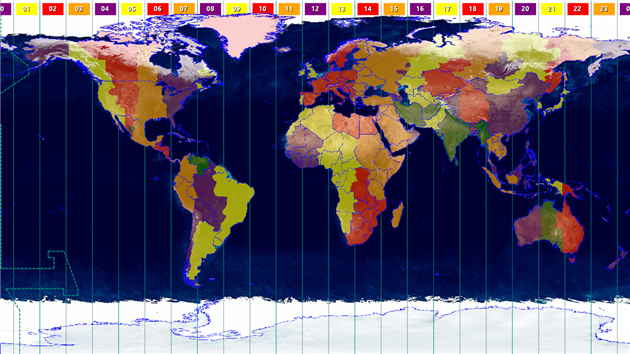

The time zones theme shows the current time zone in each country and optionally international zones as well. This theme is highly configurable: you can choose to show borders, pick colors, show hours or hours and minutes and control transparency. Catfood Earth can also show the local time for anywhere on Earth (see the places layer for more details). The video below shows how the time zones change by country from May 2021 to June 2022:

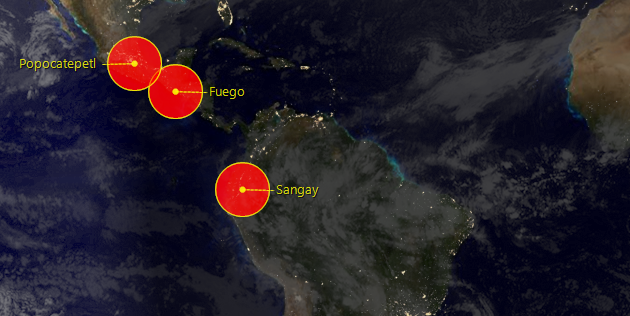

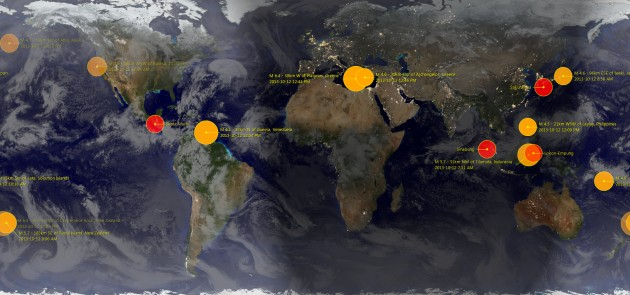

The final theme, earthquakes, uses a different daytime map with a more geological look and adds earthquakes for the past 24 hours. Size is proportional to magnitude and the quakes fade out over 24 hours so the most recent are highly visible. Again it's possible to customize extensively via layers. You can show active volcanoes and control the colors used, transparency and which magnitudes to plot. The video below shows a month of earthquakes as seen in Catfood Earth:

The layers tab is where you can fully customize Catfood Earth. Use the checkboxes to activate layers and then click each layer to access detailed settings. Layers are listed in the order that they are drawn (i.e. clouds are drawn on top of the day map, and then night is drawn on top of the clouds and so on).

The first layer is the daytime map. Catfood Earth ships with two defaults. The first uses NASA's blue marble 2 monthly images, merged with a more attractive ocean image. Catfood Earth interpolates these images daily so the extent of snow/ice and vegetation will change in a subtle way throughout the year. The other default is a less colorful map that I feel works well with earthquakes and volcanoes. You can also browse to and select a different image if you don't like the defaults.
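Daily interpolation between monthly images could look something like the sketch below. It assumes each monthly image represents the first day of its month and that the blend is linear; Catfood Earth's actual weighting is not described here, and the function names are made up.

```javascript
// Which two monthly images to blend for a date, and how far along we are.
function monthlyBlend(date) {
  const year = date.getUTCFullYear();
  const month = date.getUTCMonth(); // 0 = January
  // Day 0 of the following month is the last day of this month.
  const daysInMonth = new Date(Date.UTC(year, month + 1, 0)).getUTCDate();
  const weight = (date.getUTCDate() - 1) / daysInMonth;
  return { from: month, to: (month + 1) % 12, weight };
}

// Blend one color channel of two pixels.
function blendChannel(a, b, weight) {
  return Math.round(a * (1 - weight) + b * weight);
}
```

On April 16 this gives an even mix of the April and May images, and December wraps around to January.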

All the images and data layers in Catfood Earth use an equirectangular projection so if you are using custom images you should make sure that they use the expected projection.
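For reference, an equirectangular image maps longitude linearly to x and latitude linearly to y, so a custom image should be twice as wide as it is tall. A minimal sketch of that mapping (the `toPixel` name and the `centerLon` parameter are just for illustration):

```javascript
// Map latitude/longitude in degrees to pixel coordinates in an
// equirectangular image, optionally centered on a given longitude.
function toPixel(lat, lon, width, height, centerLon = 0) {
  let dLon = lon - centerLon;
  dLon = ((dLon + 180) % 360 + 360) % 360 - 180; // wrap into [-180, 180)
  const x = (dLon + 180) / 360 * width;
  const y = (90 - lat) / 180 * height;            // north pole is row 0
  return { x, y };
}
```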

Clouds come from the Space Science and Engineering Center at the University of Wisconsin-Madison. These images are Web Mercator by default, so my website downloads the global composite every hour and transforms the image to equirectangular for Catfood Earth. If you have a different source for clouds you can enter a custom URL. You can also set the color and transparency of the clouds layer. The defaults are designed to make the clouds fairly subtle.
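The reprojection only affects rows, since longitude is linear in both projections. A rough sketch of the row lookup, assuming a full-globe Web Mercator source and nearest-neighbor sampling (the real service may well filter more carefully, and the function names are made up):

```javascript
// Web Mercator stops at about 85.05 degrees north and south.
const MAX_MERCATOR_LAT = 85.0511287798;

// Fraction down a Web Mercator image (0 = top, 1 = bottom) for a latitude.
function mercatorYFraction(latDeg) {
  const phi = latDeg * Math.PI / 180;
  return 0.5 - Math.log(Math.tan(Math.PI / 4 + phi / 2)) / (2 * Math.PI);
}

// Source row in a Mercator image for each row of an equirectangular image.
function sourceRows(outHeight, srcHeight) {
  const rows = [];
  for (let y = 0; y < outHeight; y++) {
    const lat = 90 - (y + 0.5) / outHeight * 180; // latitude at row center
    if (Math.abs(lat) > MAX_MERCATOR_LAT) {
      rows.push(-1);                               // outside Mercator coverage
    } else {
      const row = Math.floor(mercatorYFraction(lat) * srcHeight);
      rows.push(Math.min(srcHeight - 1, Math.max(0, row)));
    }
  }
  return rows;
}
```

Rows marked -1 are the polar caps that a Mercator source cannot supply, so they would need to be left clear or filled some other way.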

The last natural layer is the nighttime map. Catfood Earth ships with NASA's 2016 update to city lights. This layer is drawn on top of daytime and clouds where it is currently nighttime (updated as often as every minute, see advanced options below). You can set the transparency of the layer and also the width used to blend the terminator between day and night (in degrees). It's also possible to select a different nighttime image if you prefer.
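To give a feel for the calculation, here is a simplified sketch of deciding how much of the night image to show at a point. It uses a common approximation for solar declination, ignores the equation of time, and assumes the blend width is applied linearly around the terminator; it is not Catfood Earth's actual code and all names are illustrative.

```javascript
const RAD = Math.PI / 180;

// Approximate point on Earth where the sun is directly overhead.
function subsolarPoint(date) {
  const startOfYear = Date.UTC(date.getUTCFullYear(), 0, 1);
  const dayOfYear = Math.floor((date.getTime() - startOfYear) / 86400000) + 1;
  const declination = -23.44 * Math.cos(2 * Math.PI / 365 * (dayOfYear + 10));
  const hours = date.getUTCHours() + date.getUTCMinutes() / 60;
  return { lat: declination, lon: (12 - hours) * 15 };
}

// Elevation of the sun above the horizon in degrees (negative at night).
function sunElevation(lat, lon, date) {
  const sun = subsolarPoint(date);
  const s = Math.sin(lat * RAD) * Math.sin(sun.lat * RAD) +
            Math.cos(lat * RAD) * Math.cos(sun.lat * RAD) *
            Math.cos((lon - sun.lon) * RAD);
  return Math.asin(Math.max(-1, Math.min(1, s))) / RAD;
}

// 0 = full day image, 1 = full night image, linear across the blend width.
function nightOpacity(elevationDeg, blendWidthDeg) {
  if (blendWidthDeg <= 0) return elevationDeg < 0 ? 1 : 0;
  const t = 0.5 - elevationDeg / blendWidthDeg;
  return Math.max(0, Math.min(1, t));
}
```

Running `nightOpacity(sunElevation(lat, lon, now), width)` for every pixel gives a mask for the night layer, and the seasonal change in declination is what changes the shape of the terminator.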

The time zones layer is based on the IANA time zone database. You can change the colors used to show the time zones and control the transparency of national and international zones. You can also choose if minutes are displayed with the time zone legends at the top of the screen. This is a good layer to use in combination with the Places layer and the Political Borders layer (both described below).

The political borders layer displays political borders in a configurable color.

The places layer shows a list of places optionally combined with the local time for each place. I've used this to show office locations around the world (handy to know if it's a good time to call someone) and also to track my geographically dispersed family. Catfood Earth ships with a default list of major cities. You can add to this, or remove everything and start from scratch. You can even export and import the list to make it easy to share between different devices. For each place you just need to provide the latitude, longitude and time zone (from the same source as the time zones layer).

Earthquake data is downloaded from the USGS feed and can show global quakes from magnitude 2.5 up. There is a lot you can fine tune for this layer: the fill and accent colors, number of past hours to show (up to 24), the transparency range (older earthquakes are more transparent) and the minimum magnitude to plot. You can also choose whether to show descriptions or not and the order in which to plot the quakes (time based, or size based which makes it easier to see what's going on when a lot of quakes hit a small area).
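As an illustration of these options, the sketch below turns features from the USGS GeoJSON summary feed into markers. The option names, the linear fade and the radius scale are assumptions for the example rather than Catfood Earth's implementation.

```javascript
// USGS summary feed: magnitude 2.5+ earthquakes for the past day (GeoJSON).
const USGS_FEED =
  'https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/2.5_day.geojson';

function quakeMarkers(features, nowMs, opts) {
  const { hours, minMagnitude, minAlpha, maxAlpha, order } = opts;
  const markers = features
    .filter(f => f.properties.mag >= minMagnitude)
    .map(f => {
      const ageHours = (nowMs - f.properties.time) / 3600000;
      return {
        lon: f.geometry.coordinates[0],
        lat: f.geometry.coordinates[1],
        mag: f.properties.mag,
        ageHours,
        // newest quake gets maxAlpha, fading linearly to minAlpha
        alpha: maxAlpha - (maxAlpha - minAlpha) * (ageHours / hours),
        radius: f.properties.mag * 3, // hypothetical pixels-per-magnitude scale
      };
    })
    .filter(m => m.ageHours >= 0 && m.ageHours <= hours);
  // Draw order: oldest first (newest on top) or largest first (small on top).
  markers.sort(order === 'size'
    ? (a, b) => b.mag - a.mag
    : (a, b) => b.ageHours - a.ageHours);
  return markers;
}
```

Drawing the largest quakes first means smaller ones land on top, which is what keeps a dense cluster readable.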

Volcano data comes from the Smithsonian Institution's Global Volcanism Program. This shows currently active volcanoes around the world. You can set the fill and accent colors, the transparency to use and whether to show descriptions or not.

The final layer shows weather radar from the National Weather Service. This layer is US only. Enter a list of one or more radar stations to display, separated by commas. You can find your local station(s) at the NWS web site.

The advanced tab of Catfood Earth Settings controls how the final image is displayed and also allows you to render sequences of images (this is how I made most of the videos above).

Set how often the image is updated in minutes, as frequently as every minute which is handy if you're displaying the local time for places. You can also skip the first update when Catfood Earth loads (potentially useful at boot time when Windows is starting a lot of programs).

There are some settings relating to text display: a global font size and an option to use drop shadows.

Earth can be centered to a specific longitude (default 0) or to a specific time. If you choose a time then the image will rotate throughout the day to keep that local time at the center of the screen.
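Centering on a time amounts to picking the longitude where it is currently that time of day. A minimal sketch, assuming mean solar time (15 degrees per hour) rather than political time zones; the function name is made up:

```javascript
// Longitude (degrees, -180 to 180) where the local solar time is targetHour.
function centerLongitudeForTime(date, targetHour) {
  const utcHours = date.getUTCHours() + date.getUTCMinutes() / 60;
  const lon = (targetHour - utcHours) * 15;
  return ((lon + 180) % 360 + 360) % 360 - 180; // wrap into [-180, 180)
}
```

Centering on 12 keeps local noon in the middle of the screen, so the center drifts west by 15 degrees every hour.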

It is also possible to set a specific output size and location for the wallpaper. The default is to fill the screen.

Click Render Images to output a sequence of images. If you use a proxy server click Proxy Settings to configure.

Finally there are some troubleshooting options that you might be asked to use if you need help with the product.

Render Images allows you to create frames from Catfood Earth based on current settings. This is useful for making animations based on how the Earth image changes over time. Set a start date/time (UTC), how to increment time between frames, the number of frames to generate, the size and an output folder. Earth will then create a sequence of JPEGs. This works well with computed layers like day/night and time zones. It will not work well with live data like clouds and earthquakes. I have made videos from live data as well, but this involves storing many images and/or data points and is not currently supported in Catfood Earth.
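The frame sequence is just a start time plus a fixed step. A small sketch of how the timestamps could be generated (the function names and the file naming pattern are hypothetical):

```javascript
// UTC timestamps for each frame in a render sequence.
function frameTimes(startUtc, stepMinutes, frameCount) {
  const times = [];
  for (let i = 0; i < frameCount; i++) {
    times.push(new Date(startUtc.getTime() + i * stepMinutes * 60000));
  }
  return times;
}

// Zero-padded names keep frames in order for video tools.
function frameFileName(index, digits = 5) {
  return 'frame' + String(index).padStart(digits, '0') + '.jpg';
}
```

At 45 minutes per frame, 32 frames cover one day.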

Catfood Earth is a little different on Android. Because phones are mostly used in a portrait orientation the wallpaper shows the full range of latitude but only a segment of longitude depending on the screen size of the device. The wallpaper is the same as the natural theme in Catfood Earth for Windows (blue marble 2 day map, clouds, city lights night map) with the optional addition of earthquakes.

Run Catfood Earth and click Settings to configure. The slice of Earth displayed is based on a central longitude. By default this will update to your phone's current location. You can also set a manual location. -90 degrees works well to center North America. In addition to the central longitude you can opt to show recent earthquakes, control cloud and night transparency and the width of the terminator between day and night. There are advanced options to ignore screen size changes and reuse the most recent image, and to paint black under the menu bar which is useful on some devices.
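Because the map is equirectangular and the full 180 degrees of latitude fill the screen height, the visible span of longitude follows from the screen's aspect ratio. A sketch of that arithmetic, with a made-up function name:

```javascript
// Longitude range visible on a portrait screen showing all latitudes.
function longitudeSlice(screenWidth, screenHeight, centerLon) {
  const span = Math.min(360, 180 * screenWidth / screenHeight);
  const wrap = lon => ((lon + 180) % 360 + 360) % 360 - 180;
  return {
    span,
    west: wrap(centerLon - span / 2),
    east: wrap(centerLon + span / 2),
  };
}
```

A 1080 x 2160 screen centered on -90 shows 90 degrees of longitude, from -135 to -45.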

After configuring Catfood Earth just select it as your current wallpaper. You can do this from device settings or tap the Set Wallpaper button in the Catfood Earth app.

Catfood Earth for Android is optimized for battery life. It will update around every ten minutes and so it might take that long to see any changes to settings. Clouds are downloaded every hour. If using your phone location it accesses the last location fix established by other apps.

Catfood Earth for Windows requires version 4.8 of the .NET Framework. You almost certainly have this already and the installer will download it if necessary. The installer for Catfood Earth is digitally signed. You may get a warning about it being infrequently used which you will need to ignore to install.

For support please visit this post, check to see if your issue has already been addressed and if not leave a new comment.

I was looking for a ‘live’ time zone clock for my desktop. Catfood Earth offers by far the best time zone tool around and a whole lot more. Beautiful, functional and thoughtfully designed, it brings the world alive. Martin Pratt

While Catfood Earth is a ‘fun’ product, it has been indispensable to me and our global business from a professional standpoint. It lets me know when I can communicate with clients around the globe and the weather I might be headed into on client visits. All the features are outstanding and there is no product available in its class. Joe D. Clayton

Thanks for such a great piece of software! It is so rare to find such a well-thought product, and then still with customer service that even surpasses it! Already well worth the cost — and all the unexpected features to boot! Thanks!! Hilton Kaplan

This is the best application of earth real live images for desktop I've experienced! Julie Branch

Catfood Earth 4.40 is now available to download.

With this release Catfood Earth is 20 years old! This update includes version 2023c of the Time Zone Database and the following bug fixes.

The National Weather Service changed one letter in the URL of their one hour precipitation weather radar product. It needs to be BOHA instead of BOHP. Presumably just checking that data consumers are paying attention? Weather radar is working again.

Not to be left out, the Smithsonian Institution Global Volcanism Program has decided to drop the www from their web site. The convention here is to redirect but they're content with just being unavailable at the former address. Recent volcanoes are working again as well.

The final fix is to the locations layer. Editing a location was crashing. This was due to a new format in the zoneinfo database that was not contemplated by the library that I use. As far as I can tell this isn't maintained any more since the death of CodePlex. While working on this update I started using GitHub Copilot, their AI assistant based on GPT 3.5. I was amazed at how helpful it was figuring out and then fixing this rather fiddly bug. The locations layer is back to normal, and I have regenerated all the time zone mapping as well.

Catfood Earth for Android now supports random locations. The slice of Earth displayed will change periodically throughout the day. You can still set a manual location or have Catfood Earth use your current location. Install from Google Play, existing users will get this update over the next few days.

Catfood Earth 4.30 is available to download.

This update fixes a couple of problems with the screensaver. It now has improved support for multiple monitors (the image will repeat rather than stretch). There was a problem with the screensaver not starting in some cases. This should now be fixed. After upgrading to 4.30 please find and run the 'Catfood Earth Screensaver Settings' shortcut to configure the screensaver.

4.30 also includes version 2022d of the time zone database.

Catfood Earth for Android 4.20 adds support for Material You, the custom color scheme introduced in Android 12. By default every wallpaper update will also subtly change your system color palette. You can override this from settings -> Wallpaper & style and switch back to basic colors if you don't like the Catfood Earth colors. This update was surprisingly painful. You can install Catfood Earth from Google Play, or for existing users it will automatically update in the next day or so.

Catfood Earth 4.20 is available to download.

This update includes the 2021d time zone database.

After procrastinating for far too long I have also migrated to .NET 4.8 and renewed my code signing certificate. You'll get at least one less warning when installing and you no longer need to enable .NET 3.5 on Windows 10 which was a pain. This shouldn't make any difference but please let me know if you have any trouble installing or running this version.

Catfood Earth 4.10 is available for download.

The National Weather Service updated their weather radar API. The weather radar layer has changed a bit: you can enter one or more (comma separated) weather station IDs and Earth will show one hour precipitation for all of them. You used to be limited to a single station, but had more options for what the radar layer displayed. Let me know if you love or hate the new version.

4.10 also includes the latest 2021a time zone database.

(I'm sure there are great reasons for it, but the 'new' NWS API is an XML document per station that links to an HTML folder listing of images where you can enjoy parsing out the latest only to download a TINY GZIPPED TIFF file FFS).

Catfood Earth 4.01 is available for download.

The timezone database has been updated to 2020a. There is also a small fix to a problem with screensaver installation on recent versions of Windows 10.

Catfood Earth for Android 4.00 is available for download and is updating through the Google Play Store.

As with the 4.00 update for Windows all images have been remastered to 4K resolution. Earth for Android has also been updated to better support Android 10 (updates are faster and the settings layout looks much better). You'll need to grant location permission in settings to have Earth automatically center on your current location. It's also possible to set a center longitude manually (I find -90 works well for centering most of the Americas).

Catfood Earth 4.00 is available for download.

The main change is that all of the images shipped with Catfood Earth have been remastered to 4K resolution. This includes NASA Blue Marble 2 monthly images (which Catfood Earth interpolates daily) and the 2016 version of Black Marble (city lights at night). The Catfood Earth clouds service has been updated to full 4K resolution as well.

Earth 4.00 also includes an update to the 2019c version of the Time Zone Database.

As well as providing desktop wallpaper and a screensaver, Catfood Earth can render frames for any time and date. To celebrate the release of 4.00 I created the 4K video below which shows all of 2019, 45 minutes per frame, 9,855 frames. You'll see the shape of the terminator change over the course of the year (I always post the seasonal changes here: Spring Equinox, Summer Solstice, Autumnal Equinox, and Winter Solstice). If you watch closely you'll also see changes in snow and ice cover and even vegetation over the course of the year.

Catfood Earth 3.46 is now available for download. Catfood Earth for Android 1.70 is available in the Google Play Store and will update automatically if you already have it installed.

This follows hot on the heels of the last release as the new clouds layer service running on this blog can update far more frequently than the source used prior to 3.45. You will now get a fresh helping of clouds every hour! Unrelated to this release I've improved the quality of the clouds image as well. If you're interested you can read about this in exhaustive detail here.

Catfood Earth 3.45 is now available to download. Catfood Earth for Android 1.60 is available on Google Play and will update automatically if you have it installed.

I only just released 3.44 with some timezone updates but in the past week the location I had been using for global cloud cover abruptly shut down. If you like up to date clouds you'll want to install the new versions as soon as possible. With this update I'm building a cloud image every three hours and serving through this blog (and thankfully CloudFlare) so any further changes should not require a code release.

Catfood Earth 3.44 is now available to download.

The timezone database has been updated to 2018i.

Eric Muller's shapefile map of timezones is no longer maintained and so Catfood Earth has switched to Evan Siroky's timezone boundary builder version.

A bug that could cause all volcanoes to be plotted at 0,0 depending on your system locale has been fixed.

Catfood Earth 3.43 updates the timezone database to 2018e. The big change is that North Korea is moving back to UTC +09 today (May 5, 2018). The time zones layer in Catfood Earth shows the current time in each zone at the top of your screen and color codes each country and region. You can also display a list of specific places (either an included list of major cities or your own custom locations).

Catfood Earth is dynamic desktop wallpaper for Windows that includes day and night time satellite imagery, the terminator between daytime and nighttime, global cloud cover, time zones, political borders, places, earthquakes, volcanoes and weather radar. You can choose which layers to display and how often the wallpaper updates.

Catfood Earth 3.42 is a small update to the latest (2016d) timezone database and the latest timezone world and countries maps from Eric Muller. If you use the political borders, places or time zones layers in Catfood Earth then you'll want to install this version.

Download the latest Catfood Earth.

Catfood Earth 3.41 fixes a problem that was preventing the weather radar layer from loading.

I've also updated to the latest (2015g) time zone database and the latest time zone map from Eric Muller.

Download the latest Catfood Earth.

I've just released Catfood Earth 3.40 for Windows and 1.50 for Android.

Both updates fix a problem with the clouds layer not updating. The Android update also adds compatibility for Android 5 / Lollipop.

Also, Catfood Earth for Android is now free. I had been charging $0.99 for the Android version but I've reached the conclusion that I'm never going to retire based on this (or even buy more than a couple of beers) so it's not worth the hassle. Catfood Earth for Windows has been free since 3.20.

Catfood Earth fans will want to download Catfood Earth 3.30. This update fixes a problem where volcanoes were all plotted in the middle of the screen.

Catfood Earth for Android now includes the option to display earthquakes (magnitude 5.0 or higher) from the USGS feed.

Catfood Earth 3.20 for Windows is now available for download. This update fixes a change in the feed address for the earthquakes layer. I've also switched to using the new NASA Black Marble night-time image and 3.20 includes the latest time zone and political border data.

Earth for Android has been updated to 1.30. This includes the new Black Marble image.

I recently upgraded to the HTC One which has a transparent notification bar. This makes it hard to see notification icons when using Catfood Earth as your wallpaper, at least in the summer when it's always light at high latitudes and your white icons are displayed on top of polar ice and clouds.

Catfood Earth for Android 1.20 fixes this with an option to paint black under the notification bar. That's the only update other than the latest Xamarin runtime.

I’ve just released Catfood Earth for Android 1.10. You can control the center of the screen manually (the most requested new feature) and also tweak the transparency of each layer and the width of the terminator between day and night. It also starts a lot faster and has fewer update glitches. Grab it from Google Play if this looks like your sort of live wallpaper.

I’ve just released Catfood Earth for Android. It’s my second app created with Xamarin’s excellent toolkit. Being able to develop in C# allowed me to reuse a lot of code from the Windows version of Catfood Earth. The Android version doesn’t include all the same layers (yet) but it’s got the main ones – daytime (twelve different satellite images included, based on NASA’s Blue Marble Next Generation but with some special processing to make them look better), nighttime (city lights, shaded to show nighttime and the terminator between day and night) and a clouds layer that is downloaded every three hours.

My main worry had been that this would suck the phone battery dry, but after a fair amount of optimization it doesn’t even register on the battery consumption list. Grab it now from Google Play ($3.99, Android 2.2 or better).

(Published to the Fediverse as: Catfood Earth #code #earth #catfood #android #c# #xamarin #monodroid #volcanoes #software #windows #weather #radar #timezone #clouds #video #screensaver Live wallpaper for Windows and Android that combines Blue Marble 2 NASA satellite imagery with live feeds of clouds, earthquakes, volcanoes and more. )

Six 4K images a day at 24 frames per second (so each second is four days) from April 18, 2019 to April 17, 2020:

I made a version of this video a couple of years ago using xplanet clouds. That was lower resolution and only had one frame per day so it's pretty quick. This version uses the new 4K cloud image I developed for Catfood Earth just over a year ago. I've been patiently saving the image six times a day (well, patiently waiting as a script does this for me). It's pretty amazing to see storms developing and careening around the planet. The still frame at the top of the post shows Dorian hitting Florida back in September.
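As a rough check on the numbers above, here is a small Python sketch of the frame arithmetic. It assumes both end dates are included and that no capture was ever missed, so the real video may be slightly shorter; the function and constant names are just for illustration.

```python
from datetime import date

IMAGES_PER_DAY = 6       # from the post: six 4K images saved each day
FRAMES_PER_SECOND = 24   # from the post: playback rate of the video


def timelapse_stats(first_day: date, last_day: date) -> dict:
    """Frame count and running time, assuming no missed captures."""
    days = (last_day - first_day).days + 1  # count both end dates
    frames = days * IMAGES_PER_DAY
    return {
        "days": days,
        "frames": frames,
        "seconds": frames / FRAMES_PER_SECOND,
        "days_per_second": FRAMES_PER_SECOND / IMAGES_PER_DAY,
    }


stats = timelapse_stats(date(2019, 4, 18), date(2020, 4, 17))
print(stats)
```

Under those assumptions the range covers 366 days (it includes February 29, 2020), which works out to 2,196 frames and about a minute and a half of video.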

(Published to the Fediverse as: 4K One Year Global Cloud Timelapse #code #software #video #timelapse #animation #clouds #earth Animation of a year of global cloud cover from April 18, 2019 to April 17, 2020. You can see storms developing and careening around the planet. Rendered from six daily 4K images. )

Warning - I no longer recommend using this script to back up Google Photos. The Google Photos API has too many bugs that Google doesn't seem interested in fixing. My personal approach at this point is to use Google Takeout to get a periodic archive of my most recent year of photos and videos. I have a tool that does some deduplication and puts everything in year/month folders. See Photo Sorter for more details.

Google has decided that backing up your photos via Google Drive is 'confusing' and so Drive based backup is going away this month. I love Google Photos but I don't trust it - I pull everything into Drive and then I stick a monthly backup from there onto an external drive in a fire safe. There is a way to get Drive backup working again using Google Apps Script and the Google Photos API. There are a few steps to follow but it's pretty straightforward - you should only need to change two lines in the script to get this working for your account.

First, four caveats to be aware of. Apps Script has a time limit and so it's possible that it could fail if moving a large number of photos. You should get an email if the script ever fails so watch out for that. Secondly and more seriously you could end up with two copies of your photos. If you use Backup and Sync to add photos from Google Drive then these photos will be downloaded from Google Photos by the script and added to Drive again. You need to either upload directly to Google Photos (i.e. from the mobile app or web site) or handle the duplicates in some way. If you run Windows then I have released a command line tool that sorts photos into year+month taken folders and handles de-duplication.

One more limitation. After a comment by 'Logan' below I realized that Apps Script has a 50MB limitation for adding files to Google Drive. The latest version of the script will detect this and send you an email listing any files that could not be copied automatically.
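The size check could look something like the sketch below. This is not the code from the script (which is Apps Script); it is a Python illustration of the idea, the names are hypothetical, and it assumes "50MB" means 50 × 1024 × 1024 bytes, which may not be the exact cutoff.

```python
# Assumption: the limit is 50 MiB; the exact cutoff Apps Script enforces may differ.
MAX_COPY_BYTES = 50 * 1024 * 1024


def split_by_size(items, limit=MAX_COPY_BYTES):
    """Split (filename, size_in_bytes) pairs into copyable and too-large names."""
    copyable, too_large = [], []
    for name, size in items:
        (copyable if size <= limit else too_large).append(name)
    return copyable, too_large


def build_alert(too_large):
    """Return the body of the alert email, or None if nothing was skipped."""
    if not too_large:
        return None
    return "Files that could not be copied automatically:\n" + "\n".join(too_large)


# Made-up example files: a small photo and a 60 MiB video.
ok, skipped = split_by_size([("beach.jpg", 4_000_000), ("party.mp4", 60 * 1024 * 1024)])
print(ok, skipped)
print(build_alert(skipped))
```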

And a fourth limitation: after investigating a comment by 'Tim' it turns out there is a bug in the Google Photos API that means it will not download original quality video files. You get a lower resolution version instead. Together with the file size limit this is a bit of a deal breaker for most videos. Photos will be fine, but videos will need a different fix.

On to the script. In Google Drive create a new spreadsheet. This is just a host for the script and makes it easy to authorize it to access Google Photos. Select 'Script editor' from the Tools menu to create a new Apps Script project.

In the script editor select 'Libraries...' from the Resources menu. Enter 1B7FSrk5Zi6L1rSxxTDgDEUsPzlukDsi4KGuTMorsTQHhGBzBkMun4iDF next to 'Add a library' and click add. This will find the Google OAuth2 library. Pick the most recent version and click Save.

Select 'Project properties' from the File menu and copy the Script ID (a long sequence of letters and numbers). You'll need this when configuring the Google Photos API.

In a new window open the Google API Console, part of the Google Cloud Platform. Create a new project, click Enable APIs and Services and find and enable the Google Photos API. Then go to the Keys section and create an OAuth Client ID. You'll need to add a consent screen, the only field you need to fill out is the product name. Choose Web Application as the application type. When prompted for the authorized redirect URL enter https://script.google.com/macros/d/{SCRIPTID}/usercallback and replace {SCRIPTID} with the Script ID you copied above. Copy the Client ID and Client Secret which will be used in the next step.
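If it helps to see the substitution spelled out, this tiny Python sketch builds the redirect URL from a Script ID. The URL pattern is the one given above; the function name and the `abc123` ID are made up for illustration.

```python
REDIRECT_TEMPLATE = "https://script.google.com/macros/d/{script_id}/usercallback"


def redirect_uri(script_id: str) -> str:
    """Fill the Script ID into the authorized redirect URL pattern."""
    script_id = script_id.strip()  # guard against stray whitespace from copy and paste
    if not script_id:
        raise ValueError("Script ID is empty")
    return REDIRECT_TEMPLATE.format(script_id=script_id)


# "abc123" is a placeholder, not a real Script ID.
print(redirect_uri("abc123"))
```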

Go back to the Apps Script project and paste the code below into the Code.gs window:

Enter the Client ID and Client Secret inside the empty quotes at the top of the file. You also need to add an email address to receive alerts for large files. There is a BackupFolder option at the top as well - the default is 'Google Photos' which will mimic the old behavior. You can change this if you like but make sure that the desired folder exists before running the script. Save the script.

Go back to the spreadsheet you created and reload. After a few seconds you will have a Google Photos Backup menu (to the right of the Help menu). Choose 'Authorize' from this menu. You will be prompted to give the script various permissions which you should grant. After this a sidebar should appear on the spreadsheet (if not choose 'Authorize' from the Google Photos Backup menu again). Click the authorize link from the sidebar to grant access to Google Photos. Once this is done you should be in business - choose Backup Now from the Google Photos Backup menu and any new items from yesterday should be copied to the Google Photos folder in Drive (or the folder you configured above if you changed this).

Finally you should set up a trigger to automate running the script every day. Choose 'Script editor' from the Tools menu to re-open the script, and then in the script window choose 'Current project's triggers' from the Edit menu. This will open yet another window. Click 'Add Trigger' which is cunningly hidden at the bottom right of the window. Under 'Choose which function to run' select 'runBackup'. Then under 'Select event source' select 'Time-driven'. Under 'Select type of time based trigger' select 'Day timer'. Under 'Select time of day' select the time window that works best for you. Click Save. The backup should now run every day.

The way the script is written you'll get a backup of anything added the previous day each time it runs. If there are any duplicate filenames in the backup folder the script will save a new copy of the file with (1) added in front of the filename. Let me know in the comments if you use this script or have any suggestions to improve it.
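The two behaviors described here can be sketched in Python as follows. This is an illustration rather than the script itself: the helper names are hypothetical, and the exact prefix format (a space after the number, and counting up to (2), (3) and so on) is an assumption, since the post only mentions (1).

```python
from datetime import date, timedelta


def previous_day(today: date) -> date:
    """The day whose new items a run on `today` would back up."""
    return today - timedelta(days=1)


def unique_name(filename: str, existing: set) -> str:
    """Prefix (1), (2), ... until the name is not already in the backup folder."""
    if filename not in existing:
        return filename
    n = 1
    while f"({n}) {filename}" in existing:
        n += 1
    return f"({n}) {filename}"


print(previous_day(date(2019, 7, 1)))            # 2019-06-30
print(unique_name("IMG_0001.jpg", {"IMG_0001.jpg"}))  # (1) IMG_0001.jpg
```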

(Published to the Fediverse as: How to backup Google Photos to Google Drive automatically after July 2019 with Apps Script #code #software #photos #appsscript #google #sheets #drive #api Easy to configure apps script to continue backing up your Google Photos to Google Drive after the July 2019 change. You just need to change two lines of the script to get this running with your Google Photos account. )

In TechCrunch today Josh Constine gets friend portability for Facebook almost right:

"In other words, the government should pass regulations forcing Facebook to let you export your friend list to other social networks in a privacy-safe way. This would allow you to connect with or follow those people elsewhere so you could leave Facebook without losing touch with your friends. The increased threat of people ditching Facebook for competitors would create a much stronger incentive to protect users and society."

The problem is having a list of friends does me no good at all when none of them are on Google Plus, Diaspora or whatever.

What we need is legislation that forces interoperability. I can share with my friends via an open protocol, and Facebook is forced to both send and receive posts from other networks. This would actually create an opportunity for plausible competition in a way that a friend export could never do. Social networking should work like email, not CompuServe.

(Published to the Fediverse as: Facebook Interoperability #marketing #software #facebook Social networking should work like email, not CompuServe. We need legislation to mandate interoperability between platforms. )

I have been experimenting with word2vec recently. Word2vec trains a neural network to guess which word is likely to appear given the context of the surrounding words. The result is a vector representation of each word in the trained vocabulary with some amazing properties (the canonical example is king - man + woman = queen). You can also find similar words by looking at cosine distance - words that are close in meaning have vectors that are close in orientation.

This sounds like it should work well for finding related posts. Spoiler alert: it does!

My old system listed posts with similar tags. This worked reasonably well, but it depended on me remembering to add enough tags to each post and a lot of the time it really just listed a few recent posts that were loosely related. The new system (live now) does a much better job which should be helpful to visitors and is likely to help with SEO as well.

I don't have a full implementation to share as it's reasonably tightly coupled to my custom CMS but here is a code snippet which should be enough to get this up and running anywhere:

The first step is getting a vector representation of a post. Word2vec just gives you a vector for a word (or short phrase depending on how the model is trained). A related technology, doc2vec, adds the document to the vector. This could be useful but isn't really what I needed here (i.e. I could solve my forgetfulness around adding tags by training a model to suggest them for me - might be a good project for another day). I ended up using a pre-trained model and then averaging together the vectors for each word. This paper (PDF) suggests that this isn't too crazy.

For the model I used word2vec-slim which condenses the Google News model down from 3 million words to 300k. This is because my blog runs on a very modest EC2 instance and a multi-gigabyte model might kill it. I load the model into Word2vec.Tools (available via NuGet) and then just get the word vectors (GetRepresentationFor(...).NumericVector) and average them together.
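The averaging step is simple enough to show in a few lines. This is a Python sketch rather than the C# used on the blog, with a toy dictionary standing in for the loaded model; skipping words that are missing from the model is an assumption about how out-of-vocabulary words should be handled.

```python
def average_vectors(words, model):
    """Average the vectors of every word found in model (a dict of word -> list of floats)."""
    vectors = [model[w] for w in words if w in model]  # ignore out-of-vocabulary words
    if not vectors:
        return None  # nothing in the post was in the vocabulary
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]


# Toy 3-dimensional "model"; a real word2vec model has far more dimensions.
toy_model = {"cloud": [1.0, 0.0, 2.0], "earth": [3.0, 2.0, 0.0]}
print(average_vectors(["cloud", "earth", "unknownword"], toy_model))  # [2.0, 1.0, 1.0]
```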

I haven't included code to build the word list but I just took every word from the post, title, meta description and tag list, removed stop words (the, and, etc) and converted to lower case.
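A minimal Python sketch of that word list step might look like this. The stop word set is a tiny illustrative sample and the tokenizing regular expression is an assumption; any reasonable tokenizer and stop word list would do.

```python
import re

# Tiny illustrative stop word list; a real one would be much longer.
STOP_WORDS = {"the", "and", "a", "an", "of", "to", "in", "is", "it", "for"}


def build_word_list(*parts):
    """Lower-case each text part, split into words and drop stop words."""
    words = []
    for part in parts:
        for token in re.findall(r"[a-z0-9']+", part.lower()):
            if token not in STOP_WORDS:
                words.append(token)
    return words


# Pass the post body, title, meta description and tags as separate parts.
print(build_word_list("The Earth and the Clouds", "clouds, earth"))
# ['earth', 'clouds', 'clouds', 'earth']
```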

Now that each post has a vector representation it's easy to compute the most related posts. For a given post compute the cosine similarity between the post vector and every other post. Sort the list in descending order and pick however many you want from the top (the similarity between the post and itself would be 1, a totally unrelated post would be 0). If your library returns cosine distance instead (1 minus the similarity), sort in ascending order. The last line in the code sample shows this comparison for one post pair using Accord.Math, also on NuGet.
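For anyone not using C#, the ranking can be sketched in plain Python. This uses cosine similarity (1 for identical direction, 0 for orthogonal vectors), so the best matches sort first in descending order; the post names and two-dimensional vectors are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def related_posts(post_id, vectors, count=3):
    """Return the ids of the `count` posts most similar to post_id."""
    target = vectors[post_id]
    scored = [
        (cosine_similarity(target, vec), other)
        for other, vec in vectors.items()
        if other != post_id  # a post is always most similar to itself, so skip it
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [other for _, other in scored[:count]]


# Made-up post vectors; real ones would be the averaged word vectors.
post_vectors = {"earth": [1.0, 0.0], "clouds": [0.9, 0.1], "facebook": [0.0, 1.0]}
print(related_posts("earth", post_vectors, count=2))  # ['clouds', 'facebook']
```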

I'm really happy with the results. This was a fast implementation and a huge improvement over tag based related posts.

Updated 2023-01-29 22:00:

I have recently moved this functionality to use an OpenAI embeddings API integration.

(Published to the Fediverse as: Better related posts with word2vec (C#) #code #software #word2vec #ithcwy #ml How to use word2vec to create a vector representation of a blog post and then use the cosine distance between posts to select improved related posts. )
