Stable Diffusion Animation of Scale Parameter

Here is a video created by animating the scale parameter in Stable Diffusion:

This uses the prompt "a photo of San Francisco shrouded by fog 8k landscape trending on artstation", seed 56345, dimensions 896x448, and frames that range from scale 0.0 to 21.9. The higher the scale, the more literally your prompt should be interpreted.
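
In the reference Stable Diffusion scripts this "scale" is the classifier-free guidance scale (the `--scale` option in CompVis's `txt2img.py`). Below is a minimal sketch of what it does at each denoising step. Plain lists stand in for the model's noise predictions, and the frames are assumed to be spaced 0.1 apart (the post only gives the endpoints):

```python
def guided_noise(uncond, cond, scale):
    """Classifier-free guidance: start from the unconditional (empty prompt)
    prediction and push it towards the prompt-conditioned one by `scale`."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def scale_schedule(start=0.0, stop=21.9, step=0.1):
    """One guidance scale per animation frame (the 0.1 step is an assumption)."""
    count = round((stop - start) / step) + 1
    return [round(start + i * step, 1) for i in range(count)]


# Toy values standing in for the model's noise estimates.
uncond = [1.0, 2.0]
cond = [3.0, 6.0]

print(guided_noise(uncond, cond, 0.0))  # scale 0: the prompt has no effect
print(guided_noise(uncond, cond, 1.0))  # scale 1: purely the conditioned prediction
frames = scale_schedule()
print(len(frames), frames[0], frames[-1])
```

At scale 0 the prompt-conditioned prediction cancels out completely. The first frame is therefore effectively generated without the prompt, which may explain what shows up there.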

Interestingly, at scale 0 Stable Diffusion seems to think San Francisco is a diverse crowd of people protesting something (and it's not wrong):

From there it settles on a foreground quickly, experiments with various numbers of Transamerica Pyramids and then starts throwing in assorted permutations of the Golden Gate Bridge. It eventually decides that the Transamerica Pyramid belongs in Marin and that the bridge is so iconic that the city is probably filled with red aircraft warning lights.

I think the versions a third of the way in are the best.

Related Posts

- A black lab chases a Roomba and then things start to get weird...

- Stable Diffusion Watches a Security Camera - a short horror movie

- Style Transfer for Time Lapse Photography

- Stable Diffusion Global Stereotypes

- Coastal

(Published to the Fediverse as: Stable Diffusion Animation of Scale Parameter #etc #video #ml #stablediffusion Animation of San Francisco in fog created by Stable Diffusion and increasing the scale parameter. )

Adobe Super Resolution Timelapse (Crystal Growth)

This is the third batch of crystals from our National Geographic Mega Crystal Growing Lab kit (see the previous two).

I have been meaning to experiment with Adobe's Super Resolution technology, and this seemed like a good project for it. The video below has the same timelapse sequence repeated three times. If you're not bored of crystals growing yet, you soon will be (don't worry, this is the last for now). The first version is a 2x digital zoom - a 960x540 crop of the original GoPro footage. The second version uses Super Resolution to scale that crop up to full 1920x1080 HD. Finally, I put both side by side so you can try to tell the difference.

Super Resolution generates a lot of data. I tried to use it once before for a longer sequence and realized that I didn't have enough hard drive space to process all the frames. In this case I upscaled the 960x540 JPEGs, and each one went from 500KB to 13MB, quite a jump. I don't see a huge difference in the side-by-side video though, and wouldn't go through the extra steps based on these results. It's possible that converting to JPEG before applying Super Resolution didn't help with quality. It's also possible that Adobe doesn't train its AI on a large array of crystal growth photos, so I can imagine it might work better for a more traditional landscape timelapse. I'll test both these hypotheses together the next time I 10x my storage.
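
Some rough arithmetic shows why storage runs out. This sketch assumes every frame grows by the same amount as these JPEGs did (500KB to 13MB, decimal units). It applies that growth to this crystal sequence of two weeks of photos taken every five minutes:

```python
def frame_count(days, minutes_between_shots):
    """Number of photos in a timelapse shot at a fixed interval."""
    return days * 24 * 60 // minutes_between_shots


def total_gb(frames, mb_per_frame):
    """Total size in decimal gigabytes for frames of equal size."""
    return frames * mb_per_frame / 1000


# Doubling width and height (960x540 -> 1920x1080) quadruples the pixel count.
print((1920 * 1080) // (960 * 540))  # 4

frames = frame_count(days=14, minutes_between_shots=5)
print(frames)                 # 4032
print(total_gb(frames, 0.5))  # about 2 GB of 500KB JPEGs
print(total_gb(frames, 13))   # about 52 GB after Super Resolution
```

That works out to roughly 26 times more data per frame for 4 times the pixels.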

(Published to the Fediverse as: Adobe Super Resolution Timelapse (Crystal Growth) #timelapse #crystals #ml #video Time lapse using Adobe Super Resolution to upscale each frame before creating the video (source is crystals growing over two weeks with photos every five minutes). )

Deep Fake Rob

I deep faked myself! Using that MyHeritage deep nostalgia thing. I'm not convinced.

(Published to the Fediverse as: Deep Fake Rob #etc #ml #video I make a deep fake video of myself using the MyHeritage deep nostalgia tool. )

Style Transfer for Time Lapse Photography

TensorFlow Hub has a great sample for transferring the style of one image to another. You might have seen Munch's The Scream applied to a turtle, or Hokusai's The Great Wave off Kanagawa applied to the Golden Gate Bridge. It's fun to play with, and I wondered how it would work for a timelapse video. I just posted my first attempt, four different shots of San Francisco, and I think it turned out pretty well.

The four sequences were all shot on an A7C with a one second exposure and an ND3 filter. I set aperture and ISO to give a one thousandth of a second exposure before fitting the filter. Here's an example shot:

I didn't want The Scream or The Wave, so I asked my kids to pick two random pieces of art each so I could have a different style for each sequence:

The style transfer network wants a 256x256 style image so I cropped and resized the art as needed.
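
Cropping to a square before resizing keeps the artwork from being squashed. The sketch below shows the crop arithmetic and assumes a centered crop (the post doesn't say which part of each piece was kept). The returned box uses the (left, upper, right, lower) order that Pillow's `Image.crop` expects. After cropping, `Image.resize((256, 256))` would produce the style image:

```python
def center_square_box(width, height):
    """Largest centered square inside a width x height image,
    as (left, upper, right, lower) pixel coordinates."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


# A hypothetical 1200x800 landscape painting: trim 200px from each side.
print(center_square_box(1200, 800))  # (200, 0, 1000, 800)
```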

The sample code pulls images from URLs. I modified it to connect to Google Drive, iterate through a source folder of images and write the transformed images to a different folder. I'm running this in Google Colab, which has the advantage that you get to use Google's GPUs and the disadvantage that it will disconnect, time out, run out of memory, etc. To work around this, the modified code can be run as many times as needed to get through all the files and will only process input images that don't already exist in the output folder. Here's a gist of my adapted Colab notebook:

One final problem is that the style transfer example produces square output images. To get around this I just set the output to 1920x1920 and then cropped an HD frame out of the middle of each image.

Here's a more detailed workflow for the project:

I usually shoot timelapse with a neutral density filter to get some nice motion blur. When I shot this sequence it was the first time I'd used my filter on a new camera/lens, and screwing in the filter threw off the focus enough to ruin the shots. Lesson learned: on this camera I need to nail the focus after attaching the filter. As I've been meaning to try style transfer for timelapse, I decided to use this slightly bad sequence as the input. Generally for timelapse I shoot in manual mode with manual focus, a fairly wide aperture and ISO 100, unless I need to bump the ISO up a bit to get to a one second exposure with the filter.
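
Going from 1/1000 s without the filter to one second with it is a factor of 1,000. That matches a filter of optical density 3.0 (often sold as ND1000), so this sketch assumes that's what "ND3" means here. An ND filter transmits 10 to the power of minus its density, so the exposure has to be that many times longer:

```python
import math


def filtered_shutter(base_seconds, density):
    """Shutter time needed with an ND filter of the given optical density.

    The filter transmits 10**-density of the light, so the exposure must be
    10**density times longer to compensate.
    """
    return base_seconds * 10 ** density


def stops(density):
    """Optical density converted to photographic stops (factors of two)."""
    return density / math.log10(2)


print(filtered_shutter(1 / 1000, 3.0))  # about 1 second
print(round(stops(3.0), 2))             # just under 10 stops
```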

After shooting I use LRTimelapse and Lightroom 6 to edit the RAW photos. LRTimelapse reduces flicker and also works well for applying a pan and/or zoom during processing. For this project I edited before applying the style transfer. The style transfer network preserves detail very well but goes crazy in areas like the sky. Rather than zooming into those artifacts, I wanted to keep them constant, which I think gives a greater sense of depth as you zoom in or out.

Once the sequence is exported from Lightroom I cancel out of the LRTimelapse render window and switch to Google Colab. Copy the rendered sequence to the input folder, add the desired style image, and then run the notebook to process it. If it misbehaves, Runtime -> Restart and run all is your friend.
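
The "only process what's missing" logic is what makes re-running after a disconnect painless. Below is a minimal sketch of the idea in plain Python. It assumes the Drive folders are mounted as local paths and that each output keeps its input's file name. `stylize` is a hypothetical stand-in for the TensorFlow Hub model call, not a real function:

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def pending_images(input_dir, output_dir):
    """Input images that don't have a matching file in the output folder yet."""
    input_dir, output_dir = Path(input_dir), Path(output_dir)
    done = {p.name for p in output_dir.iterdir()} if output_dir.exists() else set()
    return sorted(
        p for p in input_dir.iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES and p.name not in done
    )


def process_folder(input_dir, output_dir, stylize):
    """Call the hypothetical stylize(src, dst) for every unprocessed image.

    Safe to re-run: files already in output_dir are skipped.
    """
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    for src in pending_images(input_dir, output_dir):
        stylize(src, Path(output_dir) / src.name)
```

One caveat with matching on file names: a file that was half-written when the runtime died counts as done. Writing to a temporary name and renaming it once complete would avoid that.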

To get to video I use ffmpeg to render each sequence. For this project I rendered at 24 frames per second, cropping a 1920x1080 frame from each of the 1920x1920 style transfer images.
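
Here is a sketch of an equivalent ffmpeg invocation, built in Python so the crop arithmetic is visible. The input pattern `frame_%05d.png`, the output name and the encoder settings (`libx264` with `yuv420p` for broad player compatibility) are assumptions, not the exact command used. ffmpeg's `crop` filter takes `width:height:x:y`:

```python
def ffmpeg_command(pattern, output, fps=24, src=(1920, 1920), dst=(1920, 1080)):
    """Build an ffmpeg command that renders numbered frames to video,
    cropping a dst-sized frame from the middle of each src-sized image."""
    x = (src[0] - dst[0]) // 2
    y = (src[1] - dst[1]) // 2
    return [
        "ffmpeg",
        "-framerate", str(fps),  # frame rate of the input image sequence
        "-i", pattern,           # e.g. frame_00001.png, frame_00002.png, ...
        "-vf", f"crop={dst[0]}:{dst[1]}:{x}:{y}",
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        output,
    ]


cmd = ffmpeg_command("frame_%05d.png", "sequence1.mp4")
print(" ".join(cmd))
# To render, pass the list to subprocess.run(cmd, check=True).
```

For a 1920x1920 source the crop starts 420 pixels down, which keeps the middle of each image.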

Then I use DaVinci Resolve to edit the sequences together. I added a 2 second cross dissolve between sequences and a small fade at the very beginning and end.

Finally, music. I use Filmstro Pro and for this video I used the track Durian.

Here's the result:

Related Posts

- Coastal

- West Portal Timelapse of Timelapses

- World Webcams 2

- Slow Camera for Android

- West of West Portal

(Published to the Fediverse as: Style Transfer for Time Lapse Photography #code #ml #tensorflow #drive #python #video How to apply the TensorFlow Hub style transfer to every frame in a timelapse video using Python and Google Drive. )

Better related posts with word2vec (C#)

I have been experimenting with word2vec recently. Word2vec trains a neural network to guess which word is likely to appear given the context of the surrounding words. The result is a vector representation of each word in the trained vocabulary with some amazing properties (the canonical example is king - man + woman = queen). You can also find similar words by looking at cosine distance - words that are close in meaning have vectors that are close in orientation.
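
Here is a toy illustration of both properties. It uses made-up two-dimensional vectors where one axis loosely means "royal" and the other "male". Real word2vec vectors have hundreds of dimensions (300 for the Google News model):

```python
import math

# Hypothetical 2-d "embeddings": [royalty, maleness].
vectors = {
    "king": [0.9, 0.8],
    "queen": [0.9, -0.8],
    "man": [0.1, 0.8],
    "woman": [0.1, -0.8],
    "apple": [-0.7, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Word whose vector points closest to vec(a) - vec(b) + vec(c),
    excluding the three input words."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine_similarity(vectors[w], target))


print(analogy("king", "man", "woman"))  # queen
```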

This sounds like it should work well for finding related posts. Spoiler alert: it does!

My old system listed posts with similar tags. This worked reasonably well, but it depended on me remembering to add enough tags to each post, and a lot of the time it really just listed a few recent posts that were loosely related. The new system (live now) does a much better job, which should be helpful to visitors and is likely to help with SEO as well.

I don't have a full implementation to share as it's reasonably tightly coupled to my custom CMS but here is a code snippet which should be enough to get this up and running anywhere:

The first step is getting a vector representation of a post. Word2vec just gives you a vector for a word (or a short phrase, depending on how the model is trained). A related technology, doc2vec, also learns a vector for each document. This could be useful but isn't really what I needed here (although I could solve my forgetfulness around adding tags by training a model to suggest them for me - might be a good project for another day). I ended up using a pre-trained model and then averaging together the vectors for each word. This paper (PDF) suggests that this isn't too crazy.

For the model I used word2vec-slim which condenses the Google News model down from 3 million words to 300k. This is because my blog runs on a very modest EC2 instance and a multi-gigabyte model might kill it. I load the model into Word2vec.Tools (available via NuGet) and then just get the word vectors (GetRepresentationFor(...).NumericVector) and average them together.
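
The averaging step is simple enough to show without the C# plumbing. Below is a Python sketch that assumes a plain dictionary of word vectors stands in for the model loaded through Word2vec.Tools. Words missing from the slimmed-down vocabulary are skipped:

```python
def document_vector(words, model):
    """Mean of the word vectors for every word the model knows.

    Returns None when none of the words are in the vocabulary.
    """
    known = [model[w] for w in words if w in model]
    if not known:
        return None
    dims = len(known[0])
    return [sum(vec[i] for vec in known) / len(known) for i in range(dims)]


# Hypothetical 3-d vectors; "zyzzyva" is out of vocabulary and ignored.
model = {"fog": [1.0, 0.0, 2.0], "bridge": [3.0, 4.0, 0.0]}
print(document_vector(["fog", "bridge", "zyzzyva"], model))  # [2.0, 2.0, 1.0]
```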

I haven't included code to build the word list, but I just took every word from the post, title, meta description and tag list, removed stop words (the, and, etc.) and converted to lower case.
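
Those steps are easy to sketch. This version assumes a simple regular expression is good enough for splitting words and uses a tiny illustrative stop word list; a real list would be much longer:

```python
import re

# Illustrative only: a real stop word list has a few hundred entries.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "it", "for"}


def post_words(body, title, description, tags):
    """Lower-cased words from every text field of a post, minus stop words."""
    text = " ".join([body, title, description, " ".join(tags)])
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [w for w in words if w not in STOP_WORDS]


print(post_words("The fog and the bridge.", "Fog", "A timelapse", ["video"]))
# ['fog', 'bridge', 'fog', 'timelapse', 'video']
```

Duplicates are kept on purpose in this sketch, so words that appear more often pull the averaged post vector further towards them.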

Now that each post has a vector representation, it's easy to compute the most related posts. For a given post, compute the cosine distance between its vector and the vector of every other post. Sort the list in ascending order and pick however many you want from the top (cosine distance is one minus cosine similarity, so the distance between a post and itself would be 0 and a totally unrelated post would be close to 1). The last line in the code sample shows this comparison for one post pair using Accord.Math, also on NuGet.
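
Here is the ranking step sketched in Python. The post IDs and toy vectors are hypothetical stand-ins for the averaged post vectors. Cosine distance here is one minus cosine similarity, which is why an ascending sort puts the closest posts first:

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: 0 for the same direction, 1 for orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1 - dot / norms


def related_posts(post_id, post_vectors, count=5):
    """IDs of the `count` posts closest to `post_id`, nearest first."""
    target = post_vectors[post_id]
    scored = [
        (cosine_distance(target, vec), other)
        for other, vec in post_vectors.items()
        if other != post_id  # skip the post itself (distance 0)
    ]
    scored.sort()
    return [other for _, other in scored[:count]]


posts = {
    "fog-timelapse": [0.9, 0.1, 0.0],
    "bridge-at-night": [0.8, 0.3, 0.1],
    "word2vec": [0.0, 0.2, 0.9],
    "openai-embeddings": [0.1, 0.1, 0.8],
}
print(related_posts("word2vec", posts, count=2))
# ['openai-embeddings', 'bridge-at-night']
```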

I'm really happy with the results. This was a fast implementation and a huge improvement over tag based related posts.

Updated 2023-01-29 22:00:

I have recently moved this functionality to use an OpenAI embeddings API integration.

Related Posts

- Upgrading from word2vec to OpenAI

- Geotagging posts in BlogEngine.NET

- BlogEngine.NET most popular pages widget using Google Analytics

- Migrating from Blogger to BlogEngine.NET

- Correlation is not causation but...

(Published to the Fediverse as: Better related posts with word2vec (C#) #code #software #word2vec #ithcwy #ml How to use word2vec to create a vector representation of a blog post and then use the cosine distance between posts to select improved related posts. )

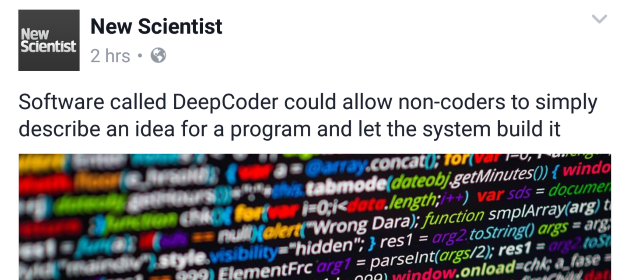

DeepCoder

New Scientist hypes DeepCoder:

"Software called DeepCoder could allow non-coders to simply describe an idea for a program and let the system build it"

Coding is nothing but simply describing an idea for a program. As simply as possible but no simpler. Won't be really useful without an AI product manager.

Related Posts

- Sod Searle And Sod His Sodding Room

- NailMathAndScienceFirst.org

- OpenAGI, or why we shouldn't trust Open AI to protect us from the Singularity

- Three reasons the dream of a robot companion isn't over

- TDCommons and the Future of Patent Law

(Published to the Fediverse as: DeepCoder #etc #ml The problem of fully describing a thing is often most of the work involved in building it. )

Sod Searle And Sod His Sodding Room

Marcus du Sautoy, writing on BBC News, brings up Searle's Chinese Room in "Can computers have true artificial intelligence?"

Searle's argument goes like this: someone who speaks no Chinese exchanges notes with a native speaker, using a system that tells him which note to respond with. The Chinese speaker thinks he's having a conversation, but the subject of the experiment doesn't understand a word of it. It's a variant of the Turing Test, and while the 'room' passes the test, Searle argues that the subject's lack of understanding means that Artificial Intelligence is impossible. The BBC even put together a three part illustration to help you understand.

I learned about the room at university and I didn't fall for it then. Du Sautoy, to be fair, expresses some skepticism, but the room makes up about a third of an article on AI, which is unforgivable.

In determining if Searle's room is intelligent or not, you must consider the entire system, including the note passing mechanism. The person operating the room might not understand Chinese, but the room as a whole does. The Chinese room is like saying a person isn't intelligent if their elbow fails to get a joke. It's the AI equivalent of Maxwell's demon, a 19th-century attempt to circumvent the second law of thermodynamics.

Every time you get a Deep Thought or a Watson the debate about the possibility of strong AI (as in just I) resurfaces. It's not a technical question, it's a religious one. If you believe we're intelligent for supernatural reasons then it's valid to wonder if AI is possible (and you might want to stop reading now). If not then the fact that we exist means that AI might be difficult, but it's not impossible and almost certainly inevitable.

The problem is that teams at IBM and Google cook up very clever solutions in a limited domain, and then people get excited that a chess computer or a trivia computer can eventually 'beat' a human at one tiny thing.

Human intelligence wasn't carefully designed, it's the slow accretion of many tiny hacks, lucky accidents that made us slowly smarter over time. If we want this type of intelligence it's highly likely that we're going to have to grow it rather than invent it. And when true AI finally arrives I'll bet that we won't understand it any better than the organic kind.

Previously: At the CHM...

Photo Credit: Stuck in Customs cc

Related Posts

- OpenAGI, or why we shouldn't trust Open AI to protect us from the Singularity

- Can I move to a Better Simulation Please?

- DeepCoder

- Three reasons the dream of a robot companion isn't over

- Rob 2.0

(Published to the Fediverse as: Sod Searle And Sod His Sodding Room #etc #software #searle #chinese #room #ml Why Searle's Chinese Room is the wrong way to think about artificial intelligence. )